library(tidyverse)

library(patchwork)

draw_and_compare <- function(true_slope, true_int, sample_size) {

# 1. Create some syntheic data

x <- rnorm(sample_size, mean = 50, sd = 5)

eps <- rnorm(sample_size, mean = 0, sd = 6)

y <- true_slope * x + true_int + eps

df <- tibble(x, y)

xlims <- range(x)

# 2. Fit regression line

fit <- lm(y ~ x, data = df)

coef_fit <- coef(fit) # grab coefficients

a_hat <- coef_fit[1]

b_hat <- coef_fit[2]

true_line <- tibble(x = xlims, y = true_slope * xlims + true_int)

fit_line <- tibble(x = xlims, y = a_hat + b_hat * xlims)

# Plot 1: True line and points created

p1 <- ggplot(df, aes(x, y)) +

geom_point(alpha = 0.7) +

geom_line(data = true_line, aes(x, y), color = "forestgreen", linewidth = 1) +

labs(title = "True Line, and Points Created",

x = NULL, y = NULL) # set axis labels to NULL to remove them

# they are redundant here.

# Plot 2: What we get to see

p2 <- ggplot(df, aes(x, y)) +

geom_point(alpha = 0.7) +

labs(title = "What We Get to See", x = NULL, y = NULL)

# Plot 3: Regression line as estimate

p3 <- ggplot(df, aes(x, y)) +

geom_point(alpha = 0.7) +

geom_line(data = fit_line, aes(x, y), color = "goldenrod2", linewidth = 1) +

labs(title = "Regression Line: Estimate of True Line",

x = NULL, y = NULL)

# Plot 4: Regression line vs true line

p4 <- ggplot(df, aes(x, y)) +

geom_point(alpha = 0.7) +

geom_line(data = fit_line, aes(x, y), color = "goldenrod2", linewidth = 1) +

geom_line(data = true_line, aes(x, y), color = "forestgreen", linewidth = 1) +

labs(title = "Regression Line and True Line",

x = NULL, y = NULL)

# Combine vertically, center titles, and return one plot

(p1 / p2 / p3 / p4) & theme(plot.title = element_text(hjust = 0.5))

}Inference of Regression

This module was adapted from the chapter in the Data 8 textbook.

1 Inference for Regression

Thus far, our analysis of the relation between variables has been purely descriptive. We know how to find the best straight line to draw through a scatter plot. The line is the best in the sense that it has the smallest mean squared error of estimation among all straight lines.

But what if our data were only a sample from a larger population? If in the sample we found a linear relation between the two variables, would the same be true for the population? Would it be exactly the same linear relation? Could we predict the response of a new individual who is not in our sample?

Such questions of inference and prediction arise if we believe that a scatter plot reflects the underlying relation between the two variables being plotted but does not specify the relation completely. For example, a scatter plot of birth weight versus gestational days shows us the precise relation between the two variables in our sample; but we might wonder whether that relation holds true, or almost true, for all babies in the population from which the sample was drawn, or indeed among babies in general.

As always, inferential thinking begins with a careful examination of the assumptions about the data. Sets of assumptions are known as models. Sets of assumptions about randomness in roughly linear scatter plots are called regression models.

1.1 A Regression Model

In brief, such models say that the underlying relation between the two variables is perfectly linear; this straight line is the signal that we would like to identify. However, we are not able to see the line clearly. What we see are points that are scattered around the line. In each of the points, the signal has been contaminated by random noise. Our inferential goal, therefore, is to separate the signal from the noise.

In greater detail, the regression model specifies that the points in the scatter plot are generated at random as follows.

- The relation between \(x\) and \(y\) is perfectly linear. We cannot see this “true line” but it exists.

- The scatter plot is created by taking points on the line and pushing them off the line vertically, either above or below, as follows:

- For each \(x\), find the corresponding point on the true line (that’s the signal), and then generate the noise or error.

- The errors are drawn at random with replacement from a population of errors that has a normal distribution with mean 0.

- Create a point whose horizontal coordinate is \(x\) and whose vertical coordinate is “the height of the true line at \(x\), plus the error”.

- Finally, erase the true line from the scatter, and display just the points created.

Based on this scatter plot, how should we estimate the true line? The best line that we can put through a scatter plot is the regression line. So the regression line is a natural estimate of the true line.

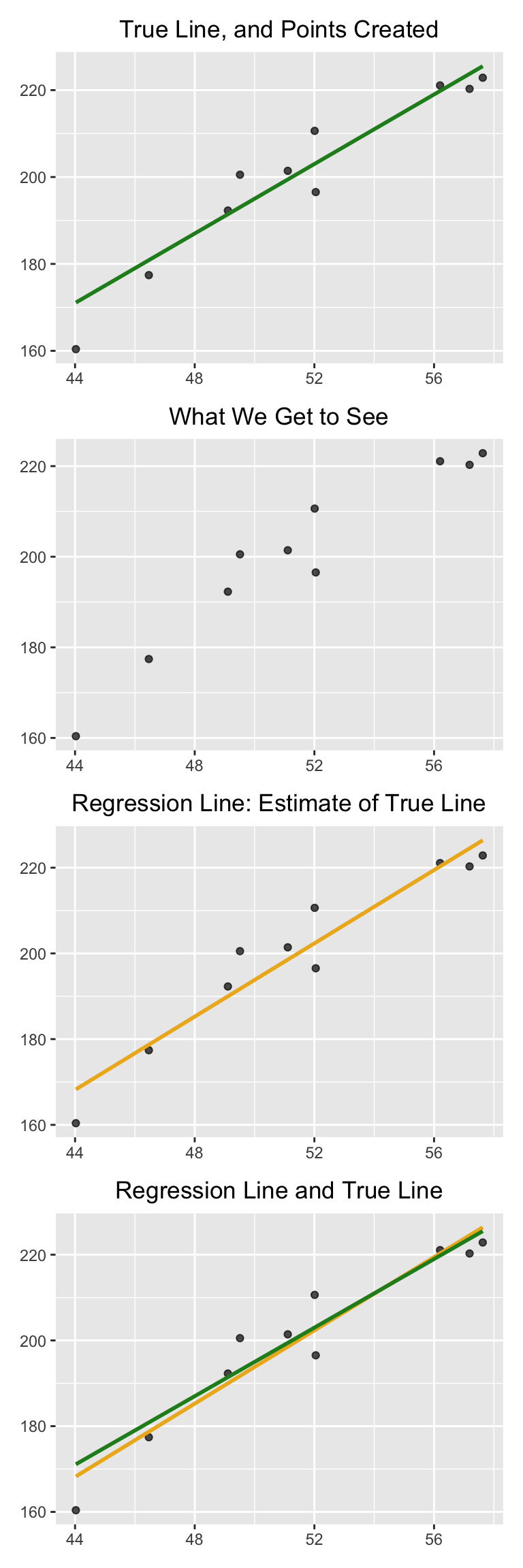

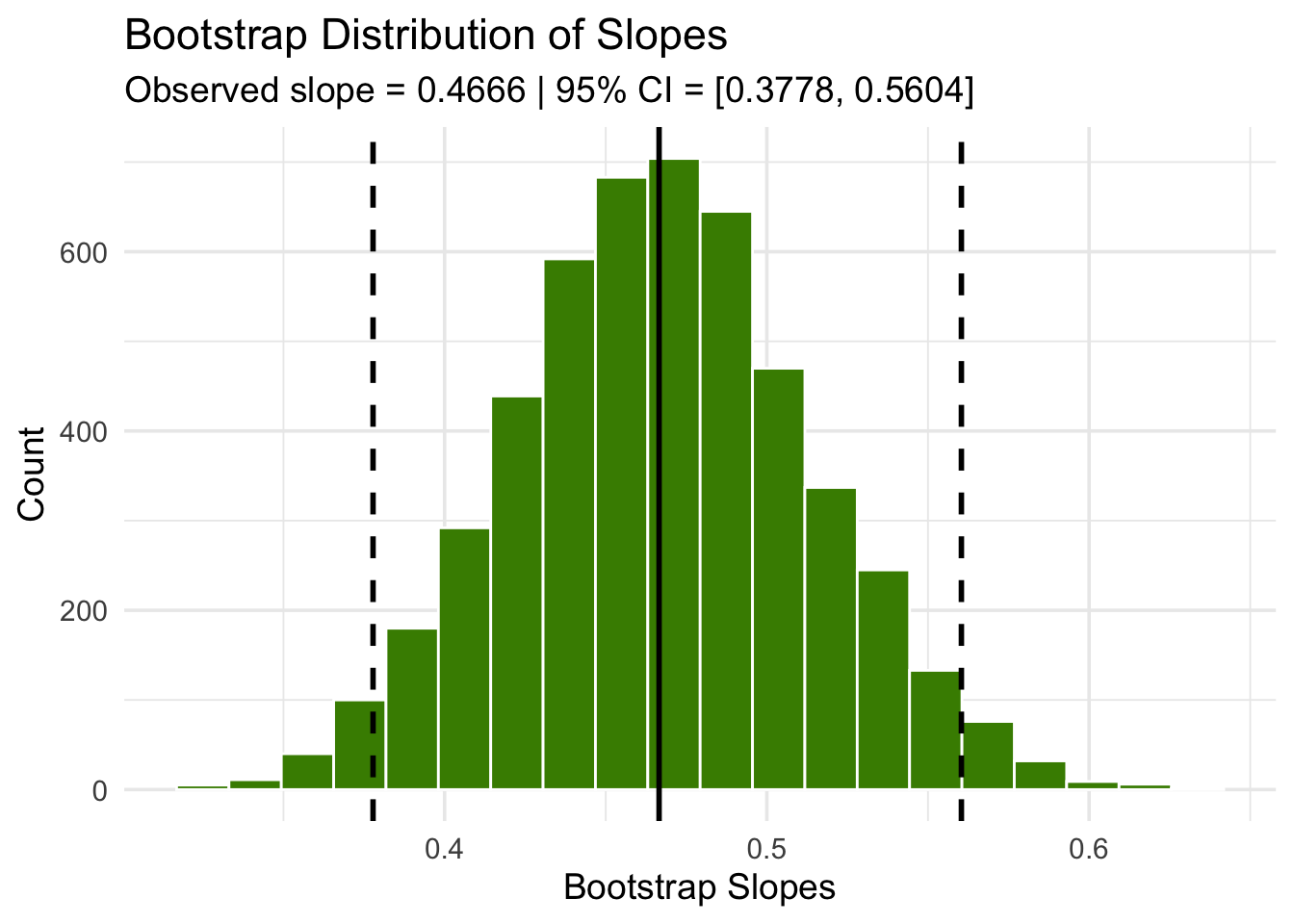

The simulation below shows how close the regression line is to the true line. The first panel shows how the scatter plot is generated from the true line. The second shows the scatter plot that we see. The third shows the regression line through the plot. The fourth shows both the regression line and the true line.

To run the simulation, call the function draw_and_compare with three arguments: the slope of the true line, the intercept of the true line, and the sample size.

Run the simulation a few times, with different values for the slope and intercept of the true line, and varying sample sizes. Because all the points are generated according to the model, you will see that the regression line is a good estimate of the true line if the sample size is moderately large.

Let’s call this function to simulate noisy data with a slope of 4, intercept of -5, and 10 points.

# The true line,

# the points created,

# and our estimate of the true line.

# Arguments: true slope, true intercept, number of points

# Example call:

draw_and_compare(4, -5, 10)

In reality, of course, we will never see the true line. What the simulation shows that if the regression model looks plausible, and if we have a large sample, then the regression line is a good approximation to the true line.

1.2 Inference for the True Slope

Our simulations show that if the regression model holds and the sample size is large, then the regression line is likely to be close to the true line. This allows us to estimate the slope of the true line.

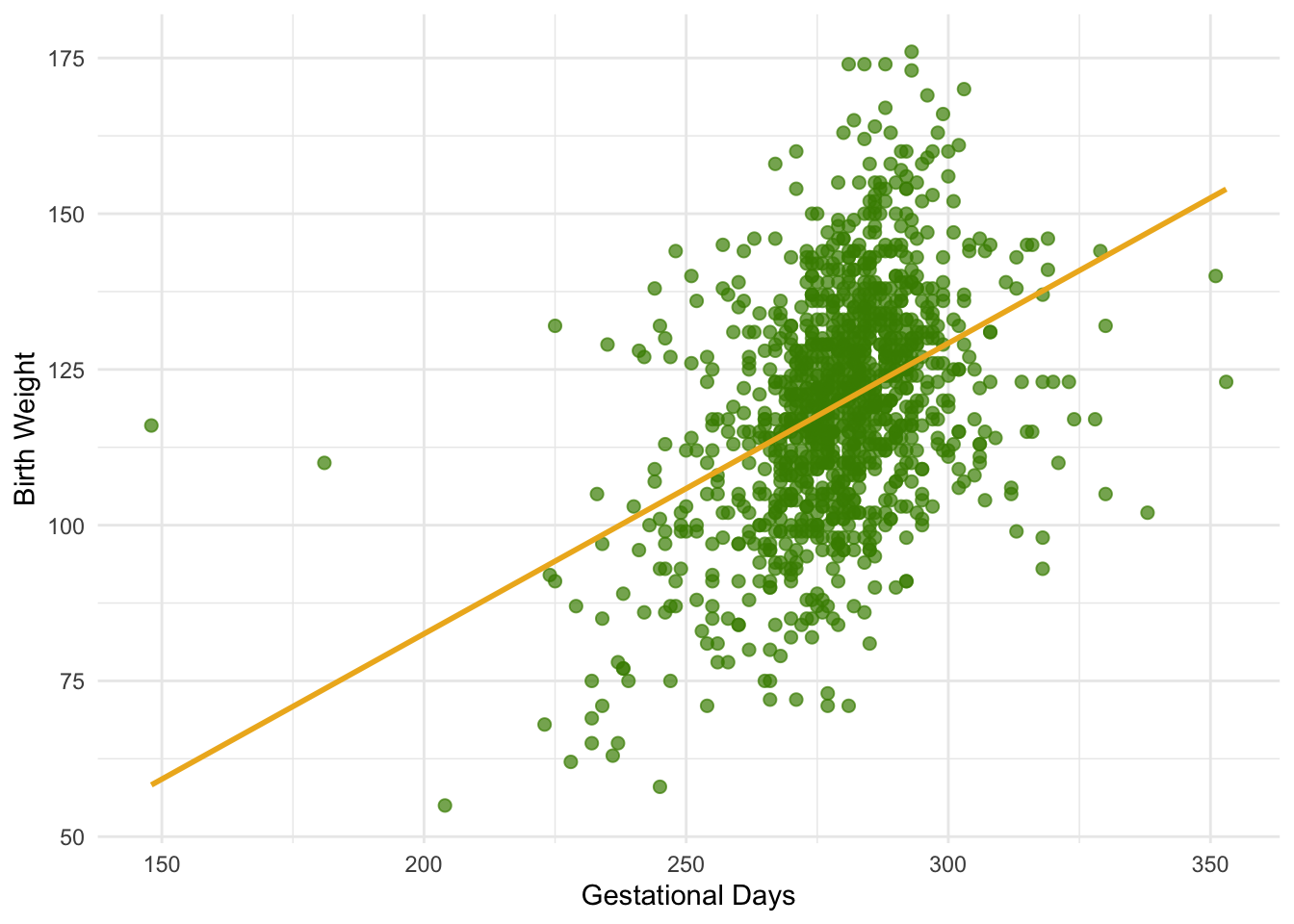

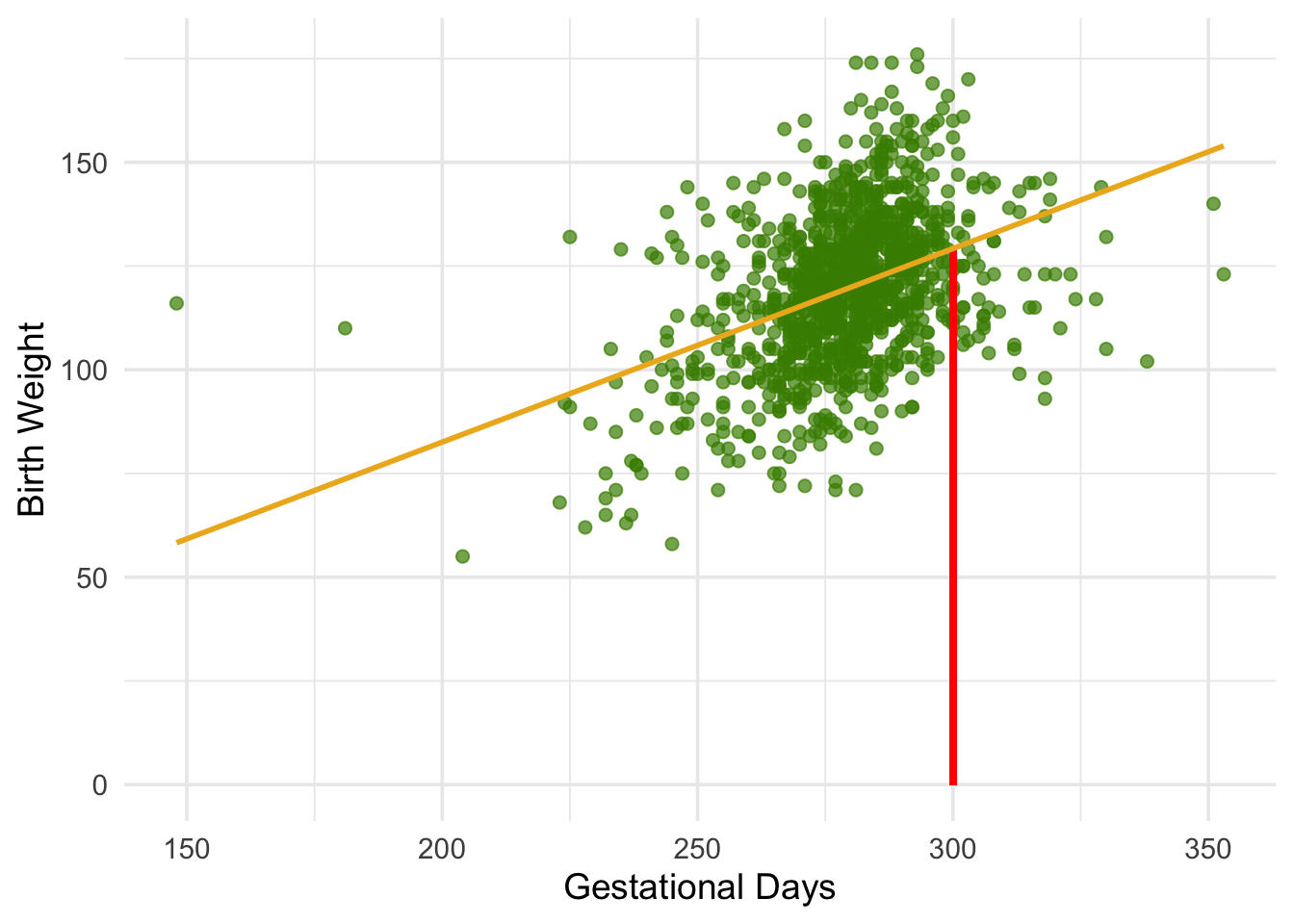

We will use our familiar sample of mothers and their newborn babies to develop a method of estimating the slope of the true line. First, let’s see if we believe that the regression model is an appropriate set of assumptions for describing the relation between birth weight and the number of gestational days. You can download the dataset here.

baby = read_csv('data/baby.csv')Code

ggplot(baby, aes(x = `Gestational Days`, y = `Birth Weight`)) +

geom_point(size = 2, alpha = 0.7, color = "chartreuse4") +

geom_smooth(method = "lm", se = FALSE, color = "goldenrod2", linewidth = 1) +

labs(

x = "Gestational Days",

y = "Birth Weight"

) +

theme_minimal()

Observed statistics (correlation and slope)

summary <- baby |>

summarize(

r = cor(`Gestational Days`, `Birth Weight`),

slope = r * sd(`Birth Weight`) / sd(`Gestational Days`)

)

summary# A tibble: 1 × 2

r slope

<dbl> <dbl>

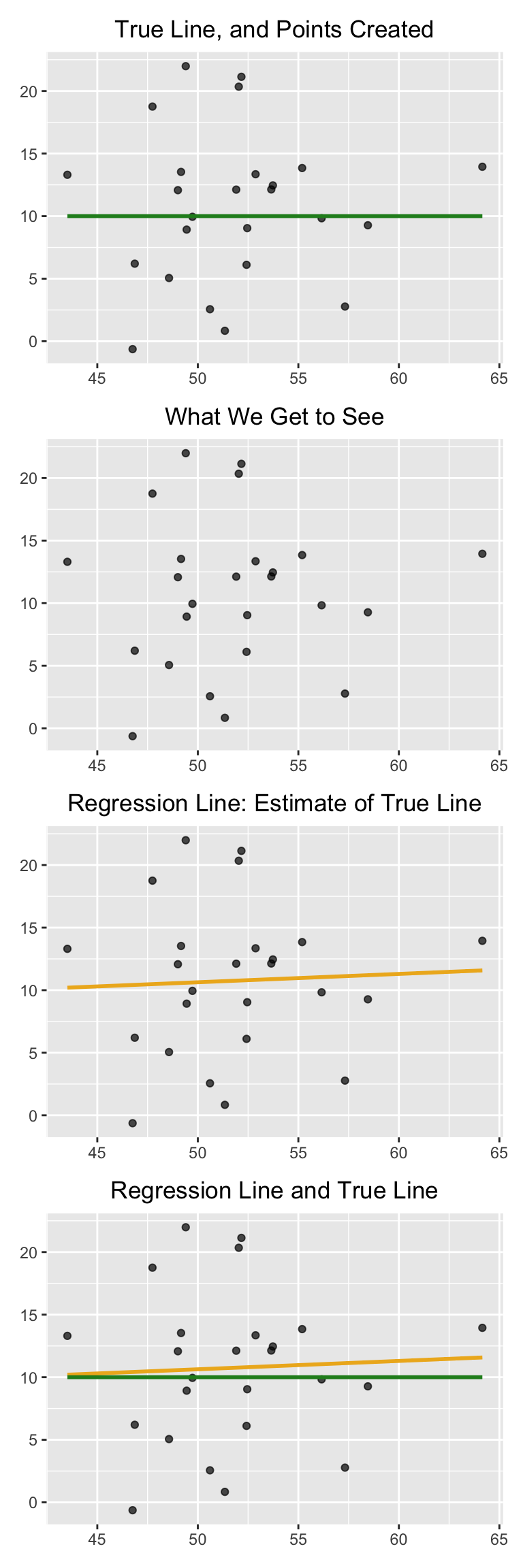

1 0.408 0.467By and large, the scatter looks fairly evenly distributed around the line, though there are some points that are scattered on the outskirts of the main cloud. The correlation is 0.4 and the regression line has a positive slope.

summary$r[1] 0.4075428Does this reflect the fact that the true line has a positive slope? To answer this question, let us see if we can estimate the true slope. We certainly have one estimate of it: the slope of our regression line. That’s about 0.47 ounces per day.

obs_slope <- summary$slope

obs_slope[1] 0.4665569But had the scatter plot come out differently, the regression line would have been different and might have had a different slope. How do we figure out how different the slope might have been?

We need another sample of points, so that we can draw the regression line through the new scatter plot and find its slope. But from where will get another sample?

You have guessed it – we will bootstrap our original sample. That will give us a bootstrapped scatter plot, through which we can draw a regression line.

1.2.1 Bootstrapping the Scatter Plot

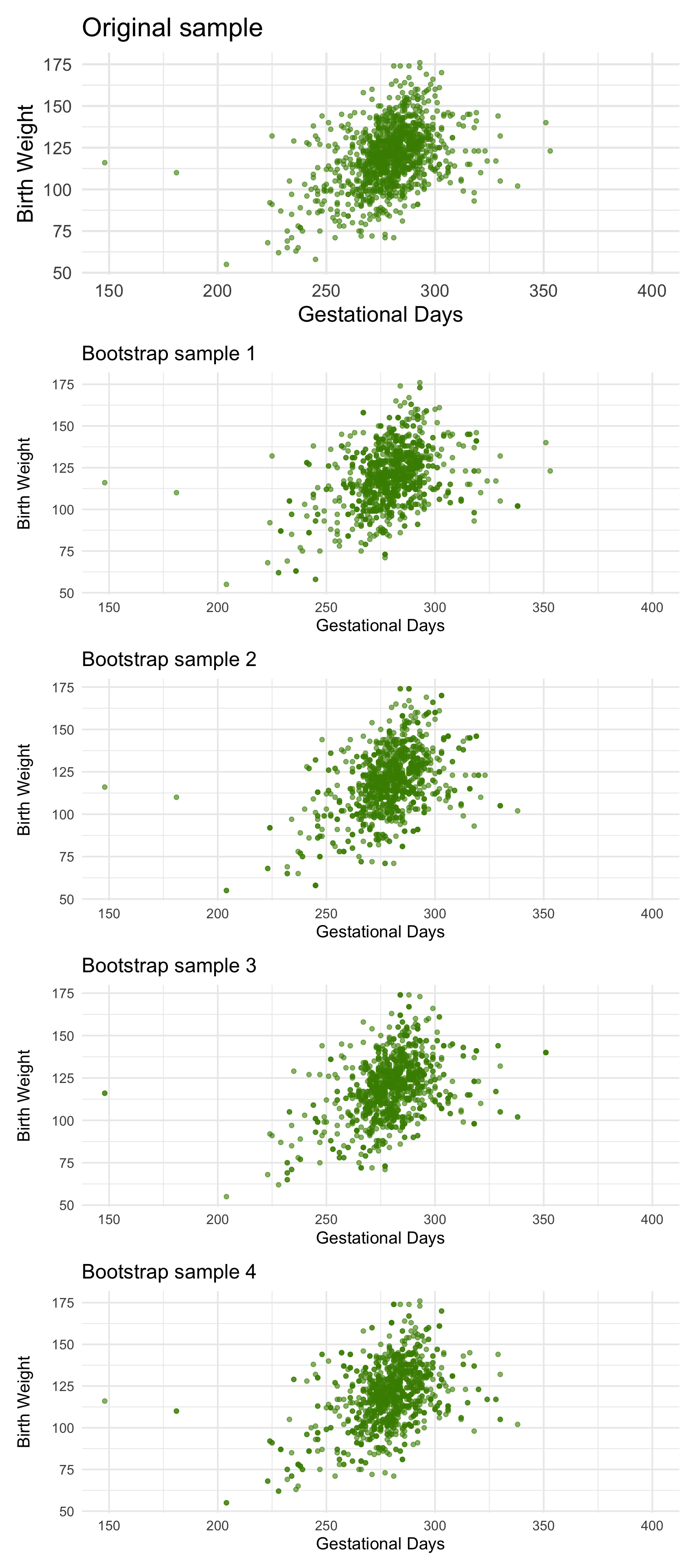

We can simulate new samples by random sampling with replacement from the original sample, as many times as the original sample size. Each of these new samples will give us a scatter plot. We will call that a bootstrapped scatter plot, and for short, we will call the entire process bootstrapping the scatter plot.

Here is the original scatter diagram from the sample, and four replications of the bootstrap resampling procedure. Notice how the resampled scatter plots are in general a little more sparse than the original. That is because some of the original points do not get selected in the samples.

1.2.2 Estimating the True Slope

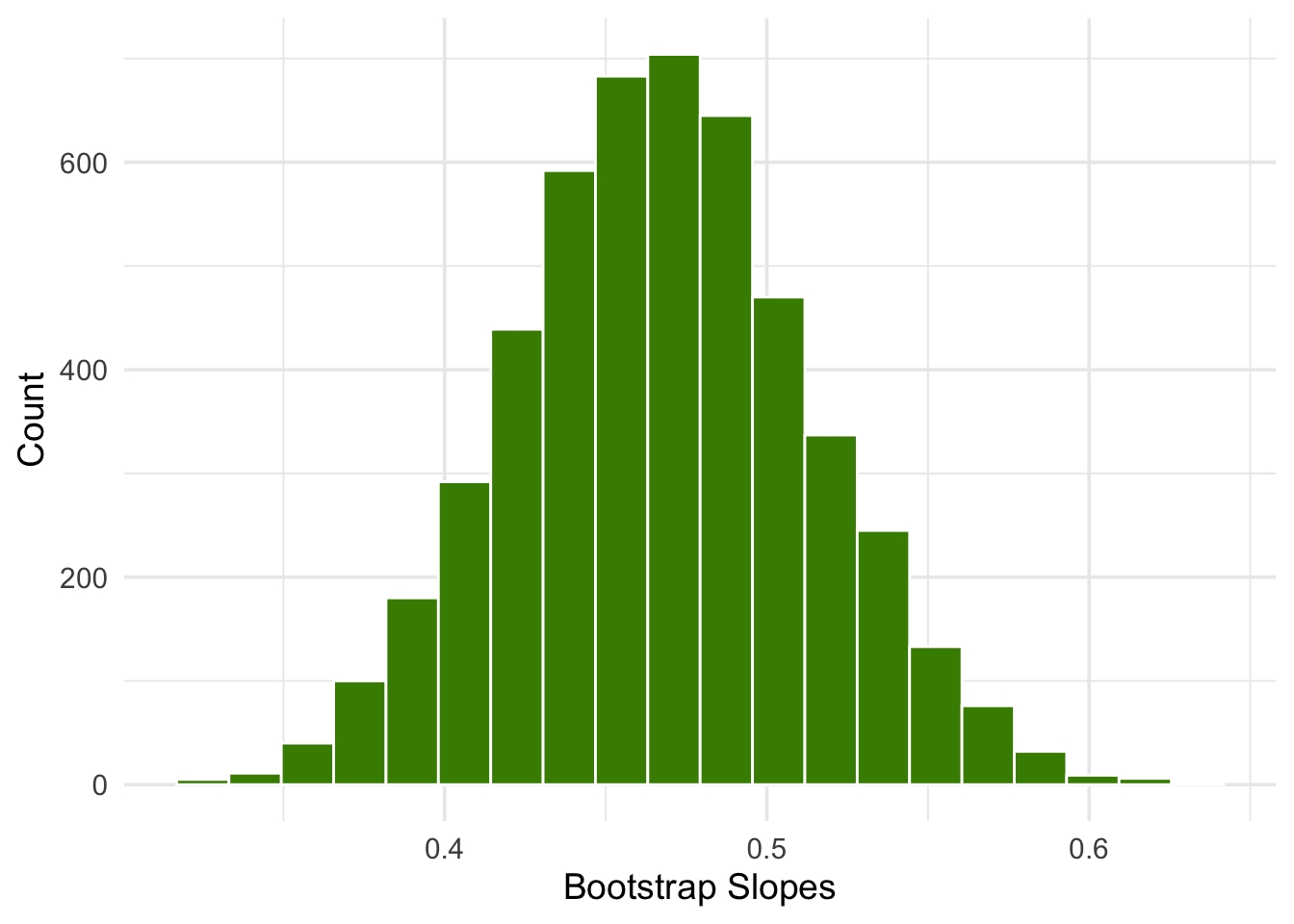

We can bootstrap the scatter plot a large number of times, and draw a regression line through each bootstrapped plot. Each of those lines has a slope. We can simply collect all the slopes and draw their empirical histogram. Recall that by default, the sample method draws at random with replacement, the same number of times as the number of rows in the table. That is, sample generates a bootstrap sample by default.

library(tidymodels)

boot_dist <- baby |>

specify(`Birth Weight` ~ `Gestational Days`) |>

generate(reps = 5000, type = "bootstrap") |>

calculate(stat = "slope")

boot_dist |>

ggplot(aes(x = stat)) +

geom_histogram(bins = 20, fill = "chartreuse4", color = "white") +

labs(x = "Bootstrap Slopes", y = "Count") +

theme_minimal(base_size = 14)

We can then construct an approximate 95% confidence interval for the slope of the true line, using the bootstrap percentile method. The confidence interval extends from the 2.5th percentile to the 97.5th percentile of the 5000 bootstrapped slopes.

# A tibble: 1 × 2

lower upper

<dbl> <dbl>

1 0.378 0.560or

boot_dist |>

get_ci(level = 0.95, type = "percentile")# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 0.378 0.560An approximate 95% confidence interval for the true slope extends from about 0.38 ounces per day to about 0.56 ounces per day.

Code

ci <- boot_dist |>

get_ci(level = 0.95, type = "percentile")

left <- ci$lower_ci

right <- ci$upper_ci

boot_dist |>

ggplot(aes(x = stat)) +

geom_histogram(bins = 20, fill = "chartreuse4", color = "white") +

geom_vline(xintercept = c(left, right), linetype = "dashed", linewidth = 1) +

geom_vline(xintercept = obs_slope, linewidth = 1) +

labs(

title = "Bootstrap Distribution of Slopes",

x = "Bootstrap Slopes",

y = "Count",

subtitle = paste0(

"Observed slope = ", signif(obs_slope, 4),

" | 95% CI = [", signif(left, 4), ", ", signif(right, 4), "]"

)

) +

theme_minimal(base_size = 14)

A 95% confidence interval for the true slope extends from about 1 ounce per inch to about 1.9 ounces per inch.

1.2.3 Could the True Slope Be 0?

Suppose we believe that our data follow the regression model, and we fit the regression line to estimate the true line. If the regression line isn’t perfectly flat, as is almost invariably the case, we will be observing some linear association in the scatter plot.

But what if that observation is spurious? In other words, what if the true line was flat – that is, there was no linear relation between the two variables – and the association that we observed was just due to randomness in generating the points that form our sample?

Here is a simulation that illustrates why this question arises. We will once again call the function draw_and_compare, this time requiring the true line to have slope 0. Our goal is to see whether our regression line shows a slope that is not 0.

Remember that the arguments to the function draw_and_compare are the slope and the intercept of the true line, and the number of points to be generated.

draw_and_compare(0, 10, 25)

Run the simulation a few times, keeping the slope of the true line 0 each time. You will notice that while the slope of the true line is 0, the slope of the regression line is typically not 0. The regression line sometimes slopes upwards, and sometimes downwards, each time giving us a false impression that the two variables are correlated.

To decide whether or not the slope that we are seeing is real, we would like to test the following hypotheses:

Null Hypothesis.

The slope of the true line is 0.

Alternative Hypothesis.

The slope of the true line is not 0.

We are well positioned to do this. Since we can construct a 95% confidence interval for the true slope, all we have to do is see whether the interval contains 0.

If it doesn’t, then we can reject the null hypothesis (with the 5% cutoff for the P-value).

If the confidence interval for the true slope does contain 0, then we don’t have enough evidence to reject the null hypothesis. Perhaps the slope that we are seeing is spurious.

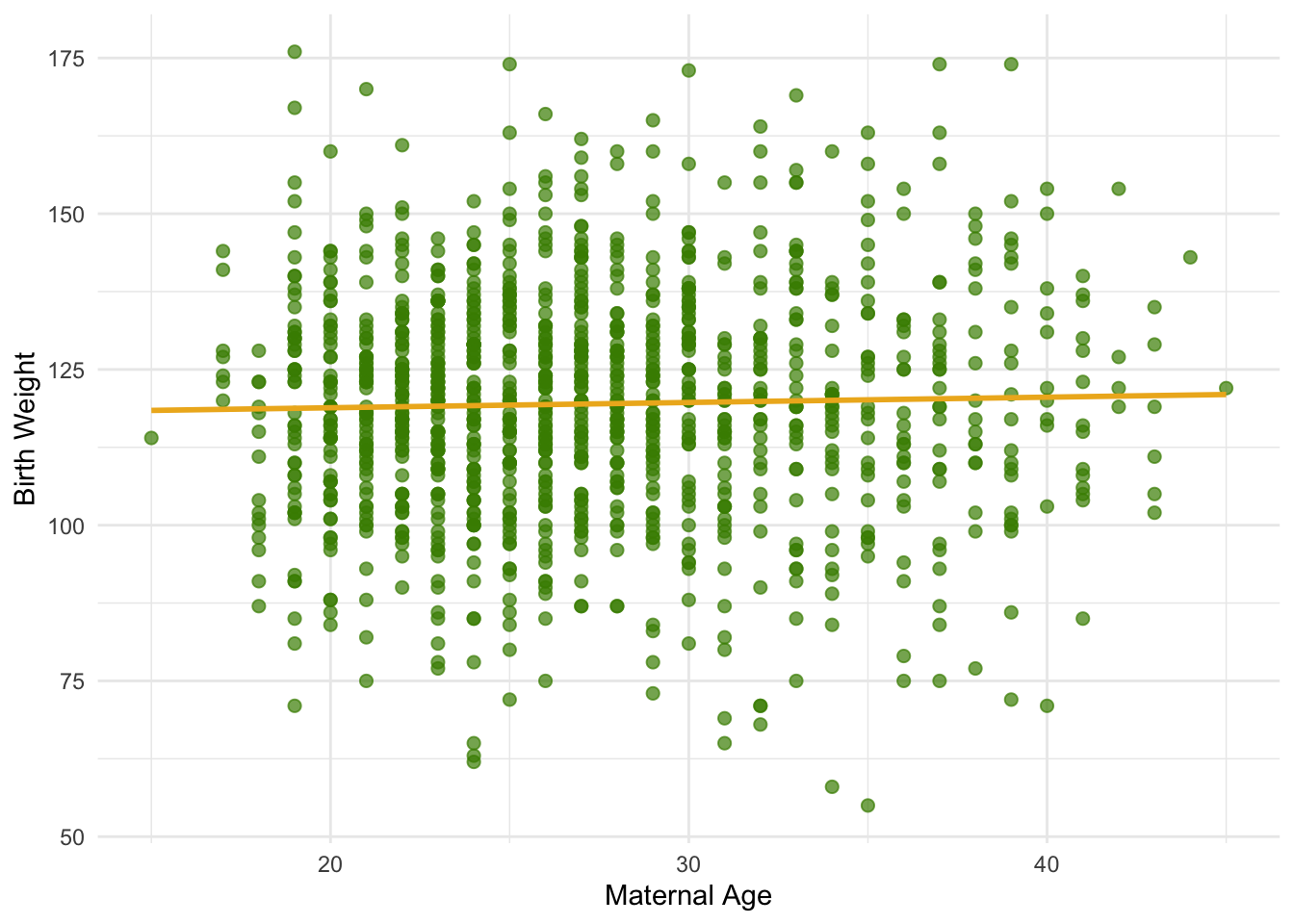

Let’s use this method in an example. Suppose we try to estimate the birth weight of the baby based on the mother’s age. Based on the sample, the slope of the regression line for estimating birth weight based on maternal age is positive, about 0.08 ounces per year.

summary <- baby |>

summarize(

r = cor(`Maternal Age`, `Birth Weight`),

slope = r * sd(`Birth Weight`) / sd(`Maternal Age`)

)

summary# A tibble: 1 × 2

r slope

<dbl> <dbl>

1 0.0270 0.0850Though the slope is positive, it’s pretty small. The regression line is so close to flat that it raises the question of whether the true line is flat.

Code

ggplot(baby, aes(x = `Maternal Age`, y = `Birth Weight`)) +

geom_point(size = 2, alpha = 0.7, color = "chartreuse4") +

geom_smooth(method = "lm", se = FALSE, color = "goldenrod2", linewidth = 1) +

labs(

x = "Maternal Age",

y = "Birth Weight"

) +

theme_minimal()

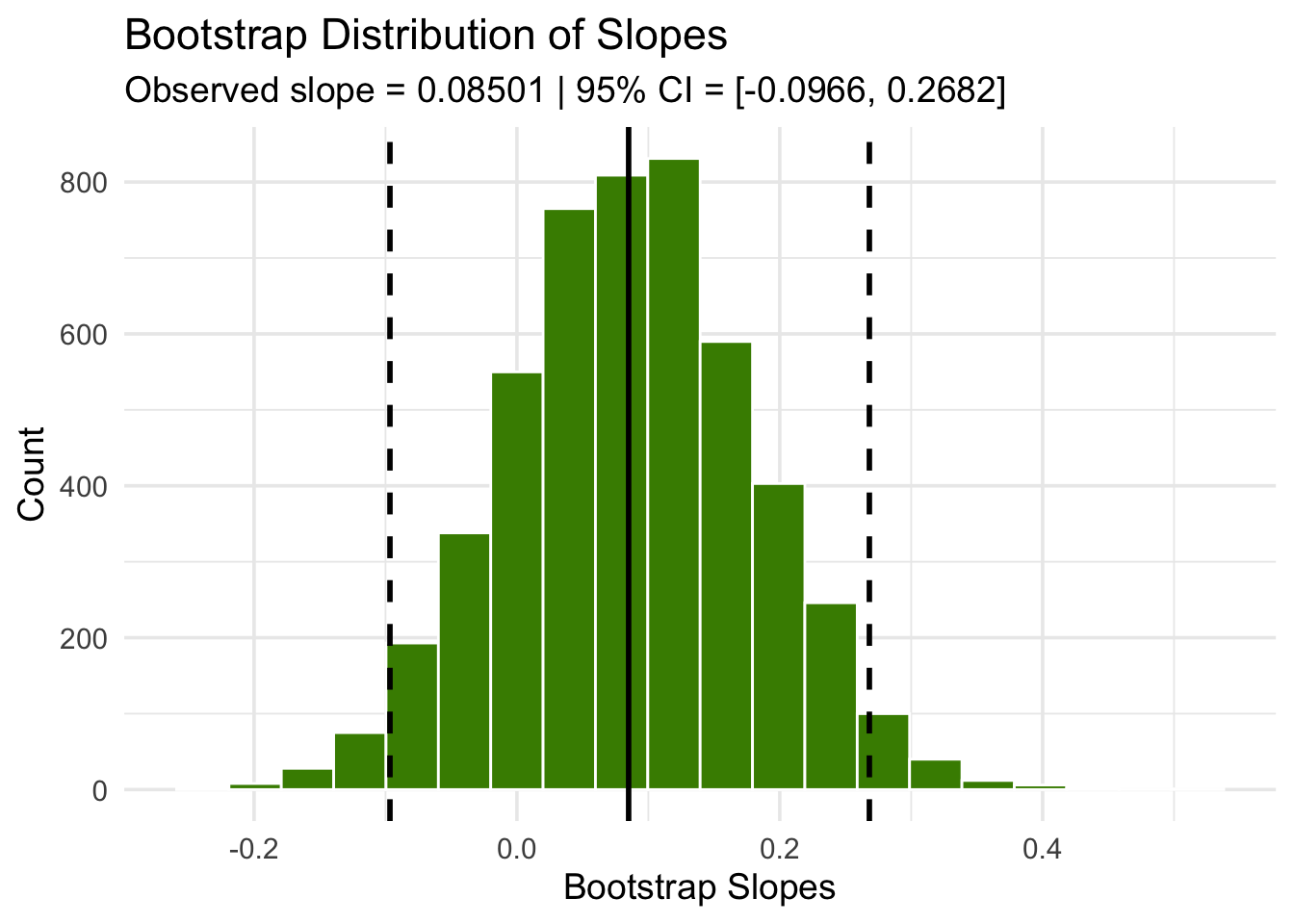

We can use our bootstrap framework to estimate the slope of the true line. The calculation shows that an approximate 95% bootstrap confidence interval for the true slope has a negative left end point and a positive right end point – in other words, the interval contains 0.

boot_dist <- baby |>

specify(`Birth Weight` ~ `Maternal Age`) |>

generate(reps = 5000, type = "bootstrap") |>

calculate(stat = "slope")

ci <- boot_dist |>

get_ci(level = 0.95, type = "percentile")

Because the interval contains 0, we cannot reject the null hypothesis that the slope of the true linear relation between maternal age and baby’s birth weight is 0. Based on this analysis, it would be unwise to predict birth weight based on the regression model with maternal age as the predictor.

1.3 Prediction Intervals

One of the primary uses of regression is to make predictions for a new individual who was not part of our original sample but is similar to the sampled individuals. In the language of the model, we want to estimate \(y\) for a new value of \(x\).

Our estimate is the height of the true line at \(x\). Of course, we don’t know the true line. What we have as a substitute is the regression line through our sample of points.

The fitted value at a given value of \(x\) is the regression estimate of \(y\) based on that value of \(x\). In other words, the fitted value at a given value of \(x\) is the height of the regression line at that \(x\).

Suppose we try to predict a baby’s birth weight based on the number of gestational days. As we saw in the previous section, the data fit the regression model fairly well and a 95% confidence interval for the slope of the true line doesn’t contain 0. So it seems reasonable to carry out our prediction.

The figure below shows where the prediction lies on the regression line. The red line is at \(x = 300\).

The height of the point where the red line hits the regression line is the fitted value at 300 gestational days.

The function lm() fits a linear model relating Birth Weight to Gestational Days, and predict() computes the fitted (predicted) value of \(\widehat{y}\) at a given \(x\).

In this example, we fit the model and then predict the height of the regression line at 300 gestational days.

# Fit the linear model

model <- lm(`Birth Weight` ~ `Gestational Days`, data = baby)

# Compute fitted value at x = 300

predict(model, newdata = tibble(`Gestational Days` = 300)) 1

129.2129 The fitted value at 300 gestational days is about 129.2 ounces. In other words, for a pregnancy that has a duration of 300 gestational days, our estimate for the baby’s weight is about 129.2 ounces.

1.3.1 The Variability of the Prediction

We have developed a method making one prediction of a new baby’s birth weight based on the number of gestational days, using the data in our sample. But as data scientists, we know that the sample might have been different. Had the sample been different, the regression line would have been different too, and so would our prediction. To see how good our prediction is, we must get a sense of how variable the prediction can be.

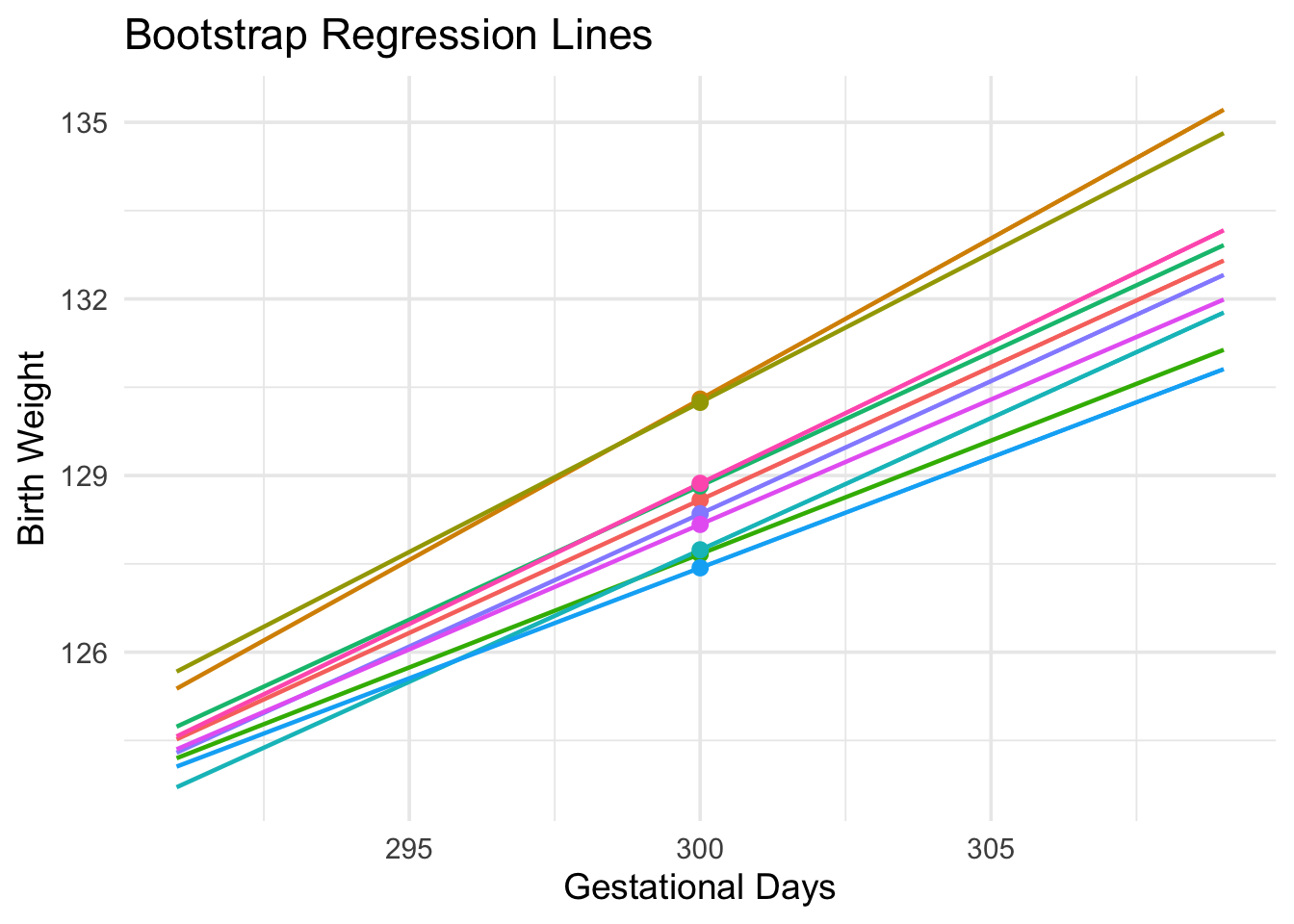

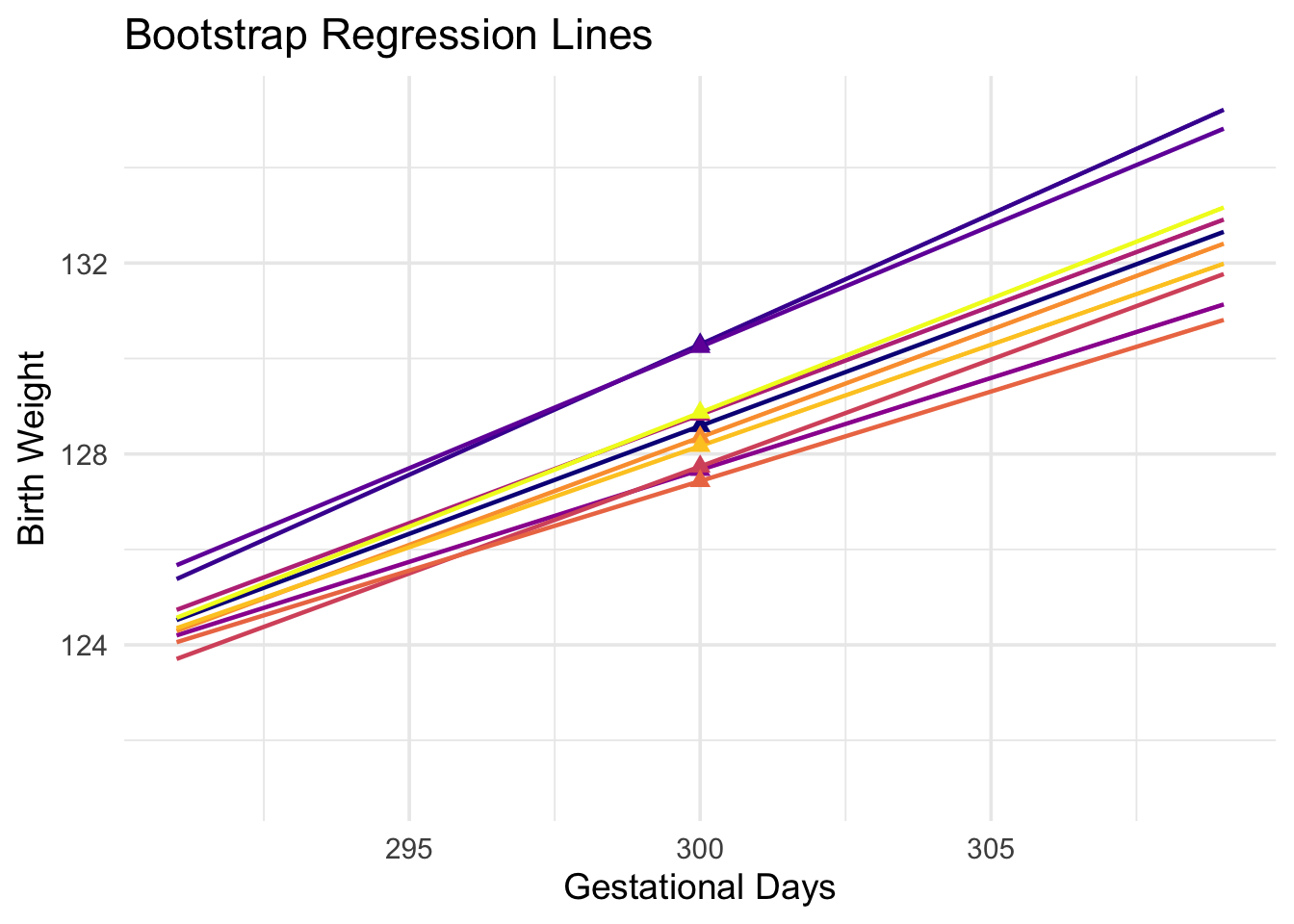

To do this, we must generate new samples. We can do that by bootstrapping the scatter plot as in the previous section. We will then fit the regression line to the scatter plot in each replication, and make a prediction based on each line. The figure below shows 10 such lines, and the corresponding predicted birth weight at 300 gestational days.

The predictions vary from one line to the next. The table below shows the slope and intercept of each of the 10 lines, along with the prediction.

lines_df| slope | intercept | prediction at x=300 |

|---|---|---|

| 0.452 | -6.982 | 128.587 |

| 0.546 | -33.628 | 130.297 |

| 0.508 | -22.206 | 130.243 |

| 0.385 | 12.050 | 127.667 |

| 0.454 | -7.504 | 128.824 |

| 0.448 | -6.682 | 127.738 |

| 0.375 | 14.861 | 127.433 |

| 0.451 | -6.931 | 128.351 |

| 0.425 | 0.819 | 128.170 |

| 0.478 | -14.449 | 128.868 |

1.3.2 Bootstrap Prediction Interval

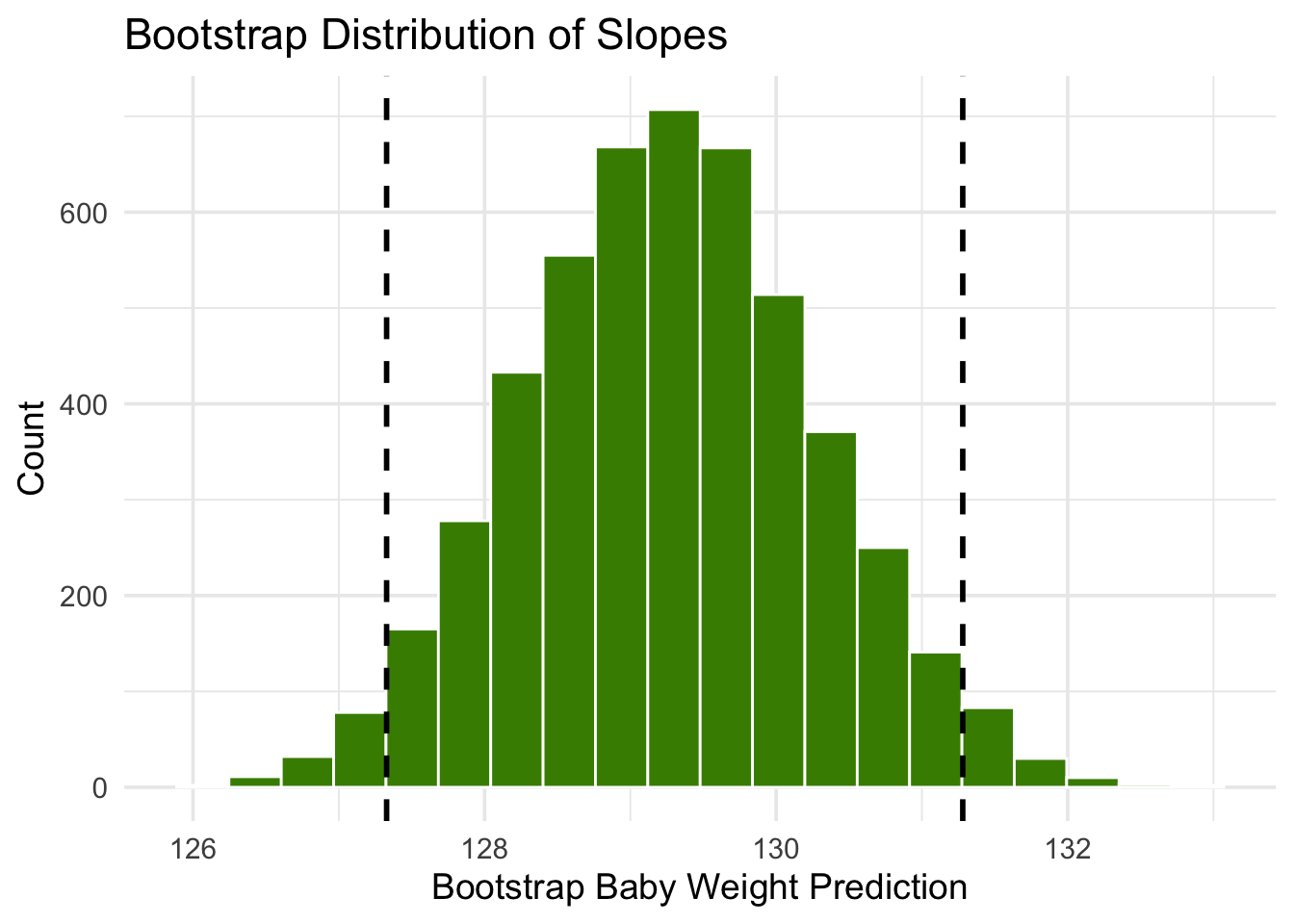

If we increase the number of repetitions of the resampling process, we can generate an empirical histogram of the predictions, \(\widehat{y}\). This will allow us to create an interval of predictions, using the same percentile method that we used create a bootstrap confidence interval for the slope.

In each repetition, the function bootstraps a sample from the original data with replacement. It then finds the predicted value of \(y\) based on the specified value of \(x\). Specifically, it calls the function predict to find the fitted value at the specified \(x\).

boot_dist <- baby |>

specify(`Birth Weight` ~ `Gestational Days`) |>

generate(reps = 5000, type = "bootstrap") |>

group_by(replicate) |>

nest() |>

mutate(

model = map(data, ~ lm(`Birth Weight` ~ `Gestational Days`, data = .x)),

yhat = map_dbl(model, ~ predict(.x, newdata = tibble(`Gestational Days` = 300)))

) |>

select(replicate, yhat)

glimpse(boot_dist)Rows: 5,000

Columns: 2

Groups: replicate [5,000]

$ replicate <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 1…

$ yhat <dbl> 128.0622, 129.5034, 127.1278, 130.2195, 130.2265, 127.8789, …ci <- boot_dist |>

rename(stat = yhat) |>

get_ci(level = 0.95, type = "percentile")

ci# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 127. 131.model <- lm(`Birth Weight` ~ `Gestational Days`, data = baby)

predict(model, newdata = tibble(`Gestational Days` = 300)) 1

129.2129

The figure above shows a bootstrap empirical histogram of the predicted birth weight of a baby at 300 gestational days, based on 5,000 repetitions of the bootstrap process. The empirical distribution is roughly normal.

An approximate 95% prediction interval of scores has been constructed by taking the “middle 95%” of the predictions, that is, the interval from the 2.5th percentile to the 97.5th percentile of the predictions. The interval ranges from about 127 to about 131. The prediction based on the original sample was about 129, which is close to the center of the interval.

1.3.3 The Effect of Changing the Value of the Predictor

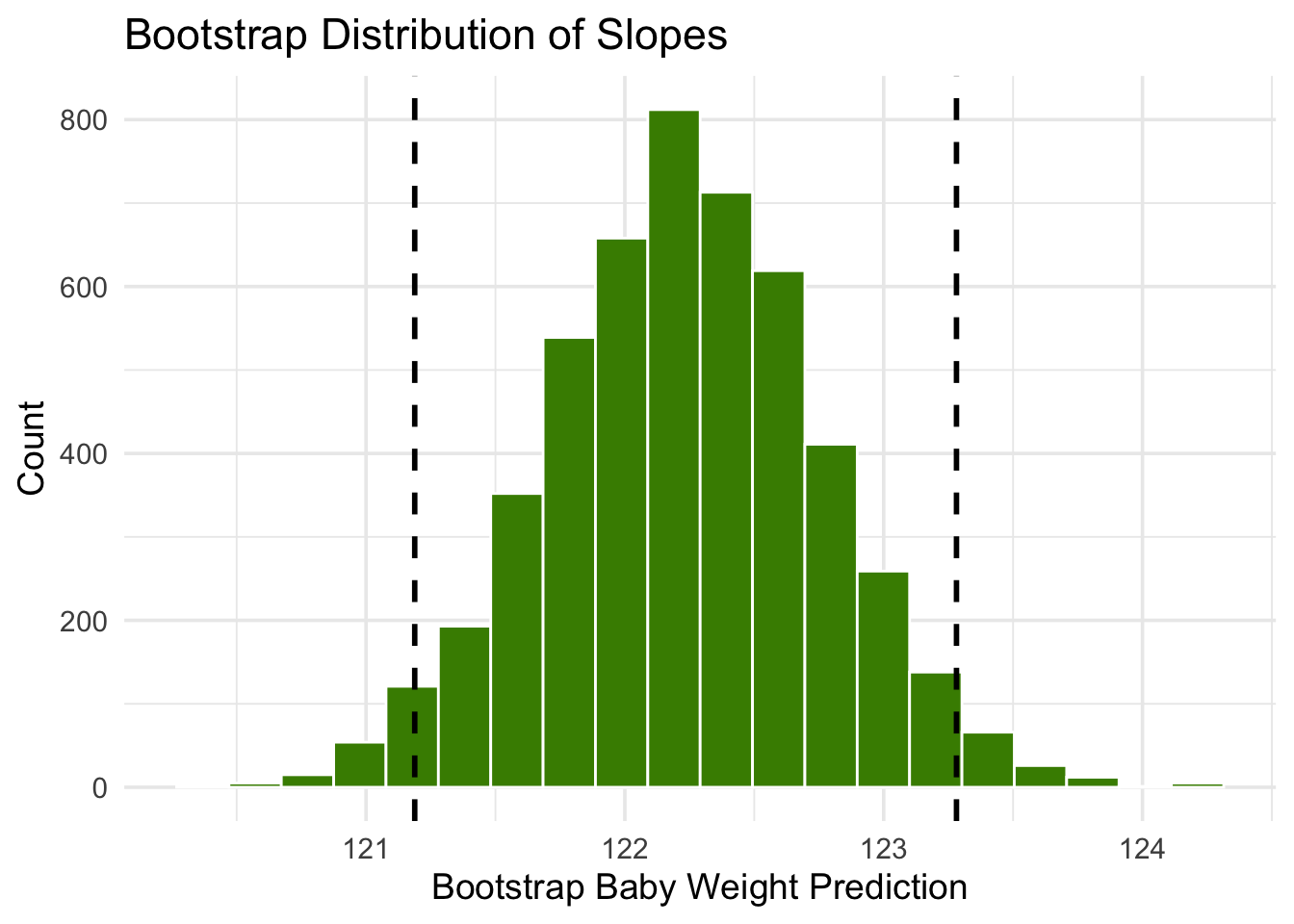

The figure below shows the histogram of 5,000 bootstrap predictions at 285 gestational days. The prediction based on the original sample is about 122 ounces, and the interval ranges from about 121 ounces to about 123 ounces.

boot_dist <- baby |>

specify(`Birth Weight` ~ `Gestational Days`) |>

generate(reps = 5000, type = "bootstrap") |>

group_by(replicate) |>

nest() |>

mutate(

model = map(data, ~ lm(`Birth Weight` ~ `Gestational Days`, data = .x)),

yhat = map_dbl(model, ~ predict(.x, newdata = tibble(`Gestational Days` = 285)))

) |>

select(replicate, yhat)

glimpse(boot_dist)Rows: 5,000

Columns: 2

Groups: replicate [5,000]

$ replicate <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 1…

$ yhat <dbl> 122.6142, 122.8532, 121.9305, 122.1799, 122.2894, 121.7549, …ci <- boot_dist |>

rename(stat = yhat) |>

get_ci(level = 0.95, type = "percentile")

ci# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 121. 123.model <- lm(`Birth Weight` ~ `Gestational Days`, data = baby)

predict(model, newdata = tibble(`Gestational Days` = 285)) 1

122.2146

Notice that this interval is narrower than the prediction interval at 300 gestational days. Let us investigate the reason for this.

The mean number of gestational days is about 279 days:

mean(baby$`Gestational Days`)[1] 279.1014So 285 is nearer to the center of the distribution than 300 is. Typically, the regression lines based on the bootstrap samples are closer to each other near the center of the distribution of the predictor variable. Therefore all of the predicted values are closer together as well. This explains the narrower width of the prediction interval.

You can see this in the figure below, which shows predictions at \(x = 285\) and \(x = 300\) for each of ten bootstrap replications. Typically, the lines are farther apart at \(x = 300\) than at \(x = 285\), and therefore the predictions at \(x = 300\) are more variable.

1.3.4 Words of caution

All of the predictions and tests that we have performed in this chapter assume that the regression model holds. Specifically, the methods assume that the scatter plot resembles points generated by starting with points that are on a straight line and then pushing them off the line by adding random normal noise.

If the scatter plot does not look like that, then perhaps the model does not hold for the data. If the model does not hold, then calculations that assume the model to be true are not valid.

Therefore, we must first decide whether the regression model holds for our data, before we start making predictions based on the model or testing hypotheses about parameters of the model. A simple way is to do what we did in this section, which is to draw the scatter diagram of the two variables and see whether it looks roughly linear and evenly spread out around a line. We should also run the diagnostics we developed in the previous section using the residual plot.